At which layer of the Open Systems Interconnect (OSI) model are the source and destination address for a datagram handled?

Transport Layer

Data-Link Layer

Network Layer

Application Layer

According to the CISSP Official (ISC)2 Practice Tests, the source and destination addresses for a datagram are handled at the Network Layer. The OSI model is a conceptual framework that divides network communication into seven layers, each with a specific function: Physical, Data-Link, Network, Transport, Session, Presentation, and Application.

The Network Layer is the third layer of the OSI model. It routes and forwards data across networks, and its protocols include the Internet Protocol (IP) and the Internet Control Message Protocol (ICMP). A datagram (packet) is the unit of data at this layer, and its header carries logical source and destination addresses, such as IP addresses, that identify the sender and the receiver. The Network Layer uses these addresses to determine a route from the sender to the receiver and to deliver the datagram to the correct destination.

The Transport Layer is not the correct answer, because it handles the source and destination ports for a segment rather than the addresses for a datagram. It is the fourth layer of the OSI model and carries data between applications through protocols such as the Transmission Control Protocol (TCP), which is connection-oriented and reliable, and the User Datagram Protocol (UDP), which is connectionless. Port numbers identify the application or service that is sending or receiving the segment, so the Transport Layer uses them to deliver each segment to the correct application or service; TCP also uses them to establish, maintain, and terminate connections.

The Data-Link Layer is not the correct answer, because it handles the source and destination addresses for a frame. It is the second layer of the OSI model and transfers data between adjacent nodes on the same link, using technologies such as Ethernet, Wi-Fi, or Bluetooth. Frame addresses are physical or hardware identifiers, such as Media Access Control (MAC) addresses, and the Data-Link Layer uses them to deliver each frame to the correct node or device.

The Application Layer is not the correct answer either, although it may handle the source and destination address for a message.
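To make the Network Layer addressing concrete, the following minimal sketch reads the source and destination addresses out of an IPv4 header. The function name `parse_ipv4_addresses` and the sample addresses (taken from documentation ranges) are illustrative assumptions, and the sample header is hand-built with its checksum left at zero.

```python
import socket
import struct


def parse_ipv4_addresses(header: bytes) -> tuple[str, str]:
    """Return (source, destination) from the fixed 20-byte part of an IPv4 header."""
    if len(header) < 20:
        raise ValueError("an IPv4 header is at least 20 bytes long")
    # Fixed layout: version/IHL, TOS, total length, identification,
    # flags/fragment offset, TTL, protocol, checksum, source, destination.
    fields = struct.unpack("!BBHHHBBH4s4s", header[:20])
    return socket.inet_ntoa(fields[8]), socket.inet_ntoa(fields[9])


# Hypothetical header for illustration (protocol 6 = TCP, checksum not computed).
sample_header = struct.pack(
    "!BBHHHBBH4s4s",
    0x45, 0, 20, 0, 0, 64, 6, 0,
    socket.inet_aton("192.0.2.10"),
    socket.inet_aton("198.51.100.7"),
)

source, destination = parse_ipv4_addresses(sample_header)
print(source, destination)
```

Ports and MAC addresses do not appear anywhere in this header; they belong to the Transport Layer segment and the Data-Link Layer frame, respectively.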

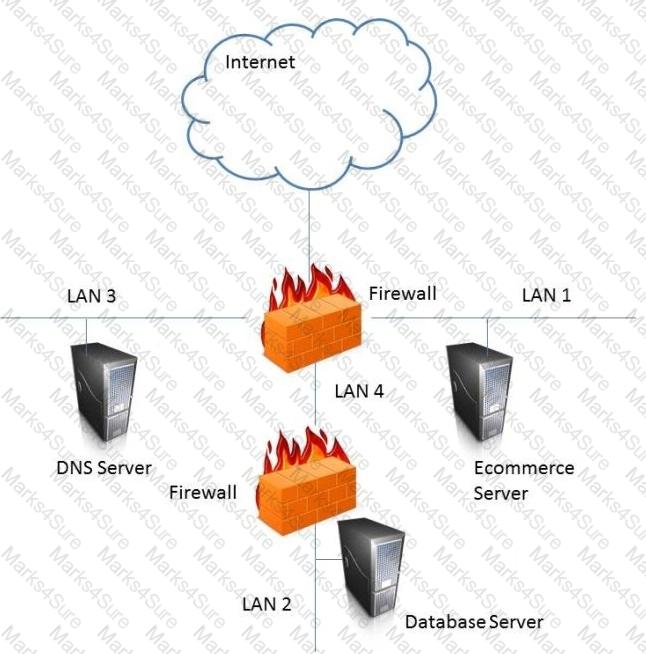

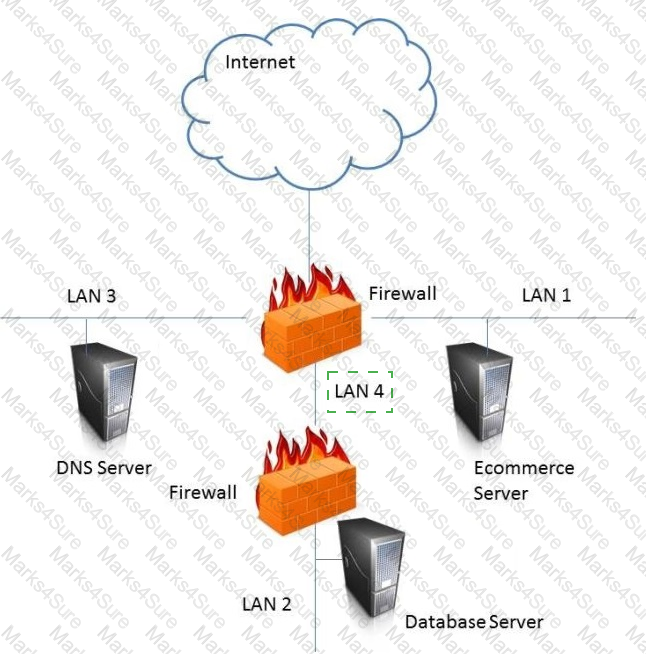

In the network design below, where is the MOST secure Local Area Network (LAN) segment to deploy a Wireless Access Point (WAP) that provides contractors access to the Internet and authorized enterprise services?

LAN 4

The most secure LAN segment to deploy a WAP that provides contractors access to the Internet and authorized enterprise services is LAN 4. A WAP is a device that enables wireless devices to connect to a wired network using Wi-Fi, Bluetooth, or other wireless standards. A WAP can provide convenience and mobility for the users, but it can also introduce security risks, such as unauthorized access, eavesdropping, interference, or rogue access points. Therefore, a WAP should be deployed in a secure LAN segment that can isolate the wireless traffic from the rest of the network and apply appropriate security controls and policies. LAN 4 is connected to the firewall that separates it from the other LAN segments and the Internet. This firewall can provide network segmentation, filtering, and monitoring for the WAP and the wireless devices. The firewall can also enforce the access rules and policies for the contractors, such as allowing them to access the Internet and some authorized enterprise services, but not the other LAN segments that may contain sensitive or critical data or systems. References: CISSP All-in-One Exam Guide, Eighth Edition, Chapter 6: Communication and Network Security, p. 317; Official (ISC)2 CISSP CBK Reference, Fifth Edition, Domain 4: Communication and Network Security, p. 437.
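The kind of firewall policy described above can be sketched as a first-match rule list with a default deny. Everything specific here is an assumption made for illustration: the address ranges, the single authorized service on port 443, and the `evaluate` helper do not come from the network design in the question.

```python
import ipaddress

# Hypothetical rules for the contractor wireless segment, evaluated top to bottom.
# Each rule: (action, source network, destination network, destination port or None for any).
RULES = [
    ("allow", "10.4.0.0/24", "10.1.0.25/32", 443),  # one authorized enterprise service
    ("deny", "10.4.0.0/24", "10.0.0.0/8", None),    # every other internal LAN segment
    ("allow", "10.4.0.0/24", "0.0.0.0/0", None),    # the Internet
]


def evaluate(src: str, dst: str, port: int) -> str:
    """Return the action of the first matching rule, or "deny" if none matches."""
    src_ip = ipaddress.ip_address(src)
    dst_ip = ipaddress.ip_address(dst)
    for action, src_net, dst_net, rule_port in RULES:
        if (
            src_ip in ipaddress.ip_network(src_net)
            and dst_ip in ipaddress.ip_network(dst_net)
            and rule_port in (None, port)
        ):
            return action
    return "deny"


print(evaluate("10.4.0.15", "10.1.0.25", 443))    # authorized service
print(evaluate("10.4.0.15", "10.2.0.9", 445))     # another internal segment
print(evaluate("10.4.0.15", "203.0.113.5", 443))  # Internet host
```

Rule order matters in this sketch: the narrow allow for the authorized service has to come before the broad deny for internal ranges.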

When designing a vulnerability test, which one of the following is likely to give the BEST indication of what components currently operate on the network?

Topology diagrams

Mapping tools

Asset register

Ping testing

According to the CISSP All-in-One Exam Guide, when designing a vulnerability test, mapping tools are likely to give the best indication of what components currently operate on the network. Mapping tools are software applications that scan the network and discover its topology, devices, services, and protocols. They can provide a graphical representation of the network structure and components, as well as detailed information about each node and connection. Mapping tools can help identify potential vulnerabilities and weaknesses in the network configuration and architecture, as well as the exposure and attack surface of the network.

Topology diagrams are not likely to give the best indication, as they may be outdated, inaccurate, or incomplete. They are static, abstract representations of the network layout and design, and may not reflect the actual, changing state of the network. An asset register has the same weakness. It is a document that lists and categorizes the assets owned by an organization, such as hardware, software, data, and personnel, but it may not capture the current status, configuration, and interconnection of the assets, or the changes that occur over time. Ping testing is a simple and limited technique that only checks the availability and response time of a host. It sends an echo request packet to a target host and waits for an echo reply packet, so it can measure connectivity and latency, but it cannot provide detailed information about the host’s characteristics, services, and vulnerabilities.
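The gap between a static asset register and what a mapping tool actually finds can be illustrated with a small reconciliation step. This is only a sketch: the `reconcile` function and the host addresses are hypothetical, and the discovered set stands in for the output of whatever mapping tool is used.

```python
def reconcile(asset_register: set[str], discovered: set[str]) -> dict[str, set[str]]:
    """Compare documented hosts with hosts found by a network mapping scan."""
    return {
        # On the network but missing from the register (possible rogue or undocumented devices).
        "unregistered": discovered - asset_register,
        # In the register but not seen by the scan (retired, offline, or filtered).
        "not_seen": asset_register - discovered,
        # Present in both sources.
        "confirmed": asset_register & discovered,
    }


# Hypothetical data for illustration.
register = {"10.0.0.5", "10.0.0.6", "10.0.0.7"}
scan_results = {"10.0.0.5", "10.0.0.7", "10.0.0.99"}

report = reconcile(register, scan_results)
print(report["unregistered"], report["not_seen"])
```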

Which one of the following activities would present a significant security risk to organizations when employing a Virtual Private Network (VPN) solution?

VPN bandwidth

Simultaneous connection to other networks

Users with Internet Protocol (IP) addressing conflicts

Remote users with administrative rights

According to CISSP For Dummies, the activity that would present a significant security risk to organizations when employing a VPN solution is simultaneous connection to other networks. A VPN creates a secure, encrypted tunnel over a public or untrusted network, such as the Internet, to connect remote users or sites to the organization’s private network. The tunnel protects the data and communication in transit and gives users access to the resources and services available on the private network. However, a VPN also introduces security risks and challenges, such as configuration errors, authentication issues, malware infections, or data leakage.

Simultaneous connection to other networks occurs when a VPN user is connected to the organization’s private network and to another network at the same time, such as a home network, a public Wi-Fi network, or a malicious network. The user’s device then sits on both networks, so an attacker can exploit the weaker security or lower trust of the other network and use the device as a backdoor into the private network. Therefore, the organization should implement and enforce policies and controls that prevent or restrict simultaneous connections while the VPN is in use.

VPN bandwidth is not a significant security risk, although it affects the performance and availability of the VPN solution. It is the amount of data that can be transmitted over the VPN tunnel per unit of time, and it depends on the speed and capacity of the network connection, the encryption and compression methods, the traffic load, and network congestion. Limited bandwidth may reduce the quality and efficiency of communication over the tunnel, but it does not directly pose a significant security risk to the private network.

Users with IP addressing conflicts are not a significant security risk either, although conflicts may cause errors and disruptions in the VPN solution. An IP addressing conflict occurs when two or more hosts on the same network have the same IP address, which is meant to identify each host uniquely on that network.
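One way to reason about simultaneous connections (often called split tunneling) is to look at which routes on the client bypass the tunnel. The sketch below is a simplification under stated assumptions: the `non_vpn_routes` helper, the interface names, and the route lists are hypothetical, and a real check would also have to allow for the host route to the VPN gateway itself.

```python
import ipaddress


def non_vpn_routes(routes: list[tuple[str, str]], vpn_interface: str) -> list[str]:
    """Return destination networks that are reachable without using the VPN interface."""
    exposed = []
    for destination, interface in routes:
        network = ipaddress.ip_network(destination)
        # Loopback and link-local routes never leave the host or the local link.
        if network.is_loopback or network.is_link_local:
            continue
        if interface != vpn_interface:
            exposed.append(destination)
    return exposed


# Hypothetical routing tables: (destination network, outgoing interface).
split_tunnel = [("0.0.0.0/0", "wlan0"), ("10.0.0.0/8", "tun0"), ("127.0.0.0/8", "lo")]
full_tunnel = [("0.0.0.0/0", "tun0"), ("127.0.0.0/8", "lo")]

print(non_vpn_routes(split_tunnel, "tun0"))
print(non_vpn_routes(full_tunnel, "tun0"))
```

A non-empty result means the device can talk to another network while the tunnel is up, which is the condition the explanation above identifies as the risk.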

During a fingerprint verification process, which of the following is used to verify identity and authentication?

A pressure value is compared with a stored template

Sets of digits are matched with stored values

A hash table is matched to a database of stored value

A template of minutiae is compared with a stored template

The method used to verify identity and authentication during a fingerprint verification process is that a template of minutiae is compared with a stored template. Fingerprint verification is a biometric process that uses the impression of a user’s finger to authenticate a claimed identity and to grant or deny access to a system or service. It can provide a high level of security, as it reduces the risk of impersonation, duplication, or sharing of credentials.

The fingerprint captured by the scanner or sensor is converted into a template of minutiae, which are the distinguishing points or features of the fingerprint, such as ridge endings, bifurcations, and deltas. This template is then compared with the template that was stored when the user was registered in the system or service.

If the captured template and the stored template are identical, or similar within a certain threshold or tolerance, the identity is verified and access is granted. If they differ beyond that threshold or tolerance, the identity is not verified and access is denied.

References: CISSP All-in-One Exam Guide, Eighth Edition, Chapter 5, page 149; Official (ISC)2 CISSP CBK Reference, Fifth Edition, Chapter 5, page 214
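The threshold-based comparison of minutiae templates can be sketched with a toy matcher. This is an illustrative simplification, not how any particular product works: the `(type, x, y)` template format, the distance tolerance, and the 0.8 threshold are assumptions, and real matchers also compensate for rotation, translation, and ridge orientation.

```python
import math

Minutia = tuple[str, float, float]  # (type, x, y), a hypothetical template format


def match_score(candidate: list[Minutia], enrolled: list[Minutia], tolerance: float = 5.0) -> float:
    """Fraction of enrolled minutiae with a same-type candidate point within `tolerance`."""
    if not enrolled:
        return 0.0
    unused = list(candidate)
    matched = 0
    for kind, x, y in enrolled:
        for point in unused:
            if point[0] == kind and math.dist((x, y), point[1:]) <= tolerance:
                unused.remove(point)  # each candidate point may be matched only once
                matched += 1
                break
    return matched / len(enrolled)


def verify(candidate: list[Minutia], enrolled: list[Minutia], threshold: float = 0.8) -> bool:
    """Accept the claimed identity when the match score reaches the threshold."""
    return match_score(candidate, enrolled) >= threshold


# Hypothetical templates for illustration.
stored = [("ending", 10, 10), ("bifurcation", 40, 25), ("ending", 70, 60),
          ("delta", 20, 80), ("bifurcation", 55, 90)]
same_finger = [("ending", 11, 9), ("bifurcation", 42, 26), ("ending", 69, 62),
               ("delta", 21, 79), ("bifurcation", 80, 10)]
other_finger = [("ending", 90, 90), ("delta", 5, 5)]

print(verify(same_finger, stored), verify(other_finger, stored))
```

Raising the threshold makes false accepts less likely and false rejects more likely, which is the trade-off behind the "threshold or tolerance" mentioned above.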

Data remanence refers to which of the following?

The remaining photons left in a fiber optic cable after a secure transmission.

The retention period required by law or regulation.

The magnetic flux created when removing the network connection from a server or personal computer.

The residual information left on magnetic storage media after a deletion or erasure.

Data remanence refers to the residual information left on magnetic storage media after a deletion or erasure. Data remanence is a security risk, as it may allow unauthorized or malicious parties to recover the deleted or erased data, which may contain sensitive or confidential information. Data remanence can be caused by the physical properties of the magnetic storage media, such as hard disks, floppy disks, or tapes, which may retain some traces of the data even after it is overwritten or formatted. Data remanence can also be caused by the logical properties of the file systems or operating systems, which may not delete or erase the data completely, but only mark the space as available or remove the pointers to the data. Data remanence can be prevented or reduced by using secure deletion or erasure methods, such as cryptographic wiping, degaussing, or physical destruction. References: CISSP All-in-One Exam Guide, Eighth Edition, Chapter 7: Security Operations, p. 443; Official (ISC)2 CISSP CBK Reference, Fifth Edition, Domain 7: Security Operations, p. 855.
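The logical cause of remanence, where deletion only removes the pointer to the data, can be shown with a toy in-memory model. The `ToyDisk` class below is purely hypothetical and does not model physical media; it only contrasts pointer removal with overwriting.

```python
class ToyDisk:
    """A toy storage device with a file table, contrasting deletion with overwriting."""

    def __init__(self, size: int) -> None:
        self.blocks = bytearray(size)
        self.table: dict[str, tuple[int, int]] = {}  # file name -> (offset, length)
        self.next_free = 0

    def write(self, name: str, data: bytes) -> None:
        start = self.next_free
        self.blocks[start:start + len(data)] = data
        self.table[name] = (start, len(data))
        self.next_free += len(data)

    def delete(self, name: str) -> None:
        # Logical deletion: the pointer is removed, the bytes stay where they were.
        del self.table[name]

    def secure_delete(self, name: str) -> None:
        # Overwrite the data region with zeros, then remove the pointer.
        start, length = self.table.pop(name)
        self.blocks[start:start + length] = bytes(length)


disk = ToyDisk(64)
disk.write("a.txt", b"secret-1")
disk.write("b.txt", b"secret-2")
disk.delete("a.txt")
disk.secure_delete("b.txt")

print(b"secret-1" in disk.blocks)  # residual data survives logical deletion
print(b"secret-2" in disk.blocks)  # overwritten data is gone in this model
```

On real media a single overwrite may not be sufficient or even possible for every block, which is why the explanation above also lists degaussing and physical destruction.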

Which of the following is TRUE about Disaster Recovery Plan (DRP) testing?

Operational networks are usually shut down during testing.

Testing should continue even if components of the test fail.

The company is fully prepared for a disaster if all tests pass.

Testing should not be done until the entire disaster plan can be tested.

Testing is a vital part of the Disaster Recovery Plan (DRP) process, as it validates the effectiveness and feasibility of the plan, identifies gaps and weaknesses, and provides opportunities for improvement and training. Testing should continue even if components of the test fail, as this will help to evaluate the impact of the failure, the root cause of the problem, and the possible solutions or alternatives. References: CISSP All-in-One Exam Guide, Eighth Edition, Chapter 10, page 1035; CISSP For Dummies, 7th Edition, Chapter 10, page 351.

Which of the following roles has the obligation to ensure that a third party provider is capable of processing and handling data in a secure manner and meeting the standards set by the organization?

Data Custodian

Data Owner

Data Creator

Data User

The role that has the obligation to ensure that a third party provider is capable of processing and handling data in a secure manner and meeting the standards set by the organization is the data owner. A data owner is the person or entity that has authority over and responsibility for a set of data within an organization, and that determines its classification, usage, protection, and retention. The obligation falls on the data owner because the data owner remains ultimately accountable for the security and quality of the data, regardless of who processes or handles it. A data owner can meet this obligation by conducting due diligence, establishing service level agreements, defining security requirements, monitoring performance, and auditing compliance. References: CISSP All-in-One Exam Guide, Eighth Edition, Chapter 2, page 61; Official (ISC)2 CISSP CBK Reference, Fifth Edition, Chapter 2, page 67

Which of the following activities BEST identifies operational problems, security misconfigurations, and malicious attacks?

Policy documentation review

Authentication validation

Periodic log reviews

Interface testing

The activity that best identifies operational problems, security misconfigurations, and malicious attacks is periodic log reviews. Log reviews are the process of examining and analyzing the records of events or activities that occur on a system or network, such as user actions, system errors, security alerts, or network traffic. Periodic log reviews can help to identify operational problems, such as system failures, performance issues, or configuration errors, by detecting anomalies, trends, or patterns in the log data. They can also help to identify security misconfigurations, such as weak passwords, open ports, or missing patches, by comparing the log data with the security policies, standards, or baselines. Finally, they can help to identify malicious attacks, such as unauthorized access, data breaches, or denial of service, by recognizing signs of intrusion, compromise, or exploitation in the log data.

The other options are not the best activities to identify operational problems, security misconfigurations, and malicious attacks, but rather different types of activities. Policy documentation review is the process of examining and evaluating the documents that define the rules and guidelines for system or network security, such as policies, procedures, or standards. It can help to ensure the completeness, consistency, and compliance of the security documents, but not to identify actual problems or attacks. Authentication validation is the process of verifying the identity and credentials of a user or device that requests access to a system or network, such as passwords, tokens, or certificates. It can help to prevent unauthorized access, but not to identify existing problems or attacks. Interface testing is the process of checking the functionality, usability, and reliability of the interfaces between different components or systems, such as modules, applications, or networks. It can help to ensure the compatibility, interoperability, and integration of the interfaces, but not to identify problems or attacks.

References: CISSP All-in-One Exam Guide, Eighth Edition, Chapter 7, p. 377; Official (ISC)2 CISSP CBK Reference, Fifth Edition, Chapter 7, p. 405.
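A periodic log review can be partly automated. The sketch below counts failed logins per source address and reports sources at or above a threshold. The log line format follows the style of OpenSSH authentication messages, but the sample lines, the threshold, and the `failed_logins_by_source` helper are assumptions made for illustration.

```python
import re
from collections import Counter

# Pattern in the style of OpenSSH "Failed password" messages (assumed log format).
FAILED_LOGIN = re.compile(r"Failed password for (?:invalid user )?(\S+) from (\S+)")


def failed_logins_by_source(lines: list[str], threshold: int = 3) -> dict[str, int]:
    """Return source addresses with at least `threshold` failed login attempts."""
    counts: Counter[str] = Counter()
    for line in lines:
        match = FAILED_LOGIN.search(line)
        if match:
            counts[match.group(2)] += 1
    return {source: n for source, n in counts.items() if n >= threshold}


# Hypothetical log excerpt.
sample_log = [
    "sshd[811]: Failed password for root from 203.0.113.9 port 52144 ssh2",
    "sshd[811]: Failed password for invalid user admin from 203.0.113.9 port 52150 ssh2",
    "sshd[812]: Accepted password for alice from 192.0.2.44 port 40022 ssh2",
    "sshd[813]: Failed password for root from 203.0.113.9 port 52161 ssh2",
    "sshd[814]: Failed password for bob from 192.0.2.77 port 41000 ssh2",
]

print(failed_logins_by_source(sample_log))
```

The same pass over the logs could also count error messages or configuration warnings, which is what makes log review useful for operational problems as well as attacks.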

The application of which of the following standards would BEST reduce the potential for data breaches?

ISO 9000

ISO 20121

ISO 26000

ISO 27001

The standard that would best reduce the potential for data breaches is ISO 27001. ISO 27001 is an international standard that specifies the requirements and the guidelines for establishing, implementing, maintaining, and improving an information security management system (ISMS) within an organization. An ISMS is a systematic approach to managing the information security of the organization, by applying the principles of plan-do-check-act (PDCA) cycle, and by following the best practices of risk assessment, risk treatment, security controls, monitoring, review, and improvement. ISO 27001 can help reduce the potential for data breaches, as it can provide a framework and a methodology for the organization to identify, protect, detect, respond, and recover from the information security incidents or events that could compromise the confidentiality, integrity, or availability of the data or the information. References: CISSP All-in-One Exam Guide, Eighth Edition, Chapter 1, page 25; Official (ISC)2 CISSP CBK Reference, Fifth Edition, Chapter 1, page 33

Which of the following is BEST suited for exchanging authentication and authorization messages in a multi-party decentralized environment?

Lightweight Directory Access Protocol (LDAP)

Security Assertion Markup Language (SAML)

Internet Message Access Protocol (IMAP)

Transport Layer Security (TLS)

Security Assertion Markup Language (SAML) is best suited for exchanging authentication and authorization messages in a multi-party decentralized environment. SAML is an XML-based standard that enables single sign-on (SSO) and federated identity management (FIM) between different domains and organizations. SAML allows a user to authenticate once at an identity provider (IdP) and access multiple service providers (SPs) without re-authenticating, by using assertions that contain information about the user’s identity, attributes, and privileges. SAML also allows SPs to request and receive authorization decisions from the IdP, based on the user’s access rights and policies. SAML is designed to support a decentralized and distributed environment, where multiple parties can exchange and verify the user’s identity and authorization information in a secure and interoperable manner.

Lightweight Directory Access Protocol (LDAP) is not best suited, as it is a protocol for accessing and managing directory services, such as Active Directory or OpenLDAP. LDAP is used to store and retrieve information about users, groups, devices, and other objects in a hierarchical and structured manner, but it does not provide a mechanism for SSO or FIM across different domains and organizations. Internet Message Access Protocol (IMAP) is not best suited, as it is a protocol for accessing and managing email messages stored on a remote server. IMAP is used to retrieve and manipulate email messages from multiple devices and clients, but it does not provide a mechanism for SSO or FIM across different domains and organizations. Transport Layer Security (TLS) is not best suited, as it is a protocol that provides security and encryption for data transmission over a network, such as the Internet. TLS is used to establish a secure and authenticated channel between two parties, such as a web browser and a web server, but it does not provide a mechanism for SSO or FIM across different domains and organizations.
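To show what an SP reads from a SAML assertion, the sketch below parses a minimal, unsigned SAML 2.0 assertion with the standard library. The sample assertion, its issuer URL, subject, and `role` attribute are invented for illustration, and `summarize_assertion` is a hypothetical helper. It deliberately skips signature, audience, and validity checks, which a real SP must perform before trusting any assertion.

```python
import xml.etree.ElementTree as ET

SAML_NS = {"saml": "urn:oasis:names:tc:SAML:2.0:assertion"}

# Hypothetical, unsigned assertion for illustration only.
SAMPLE_ASSERTION = """
<saml:Assertion xmlns:saml="urn:oasis:names:tc:SAML:2.0:assertion"
                ID="_example" Version="2.0" IssueInstant="2024-01-01T00:00:00Z">
  <saml:Issuer>https://idp.example.org</saml:Issuer>
  <saml:Subject>
    <saml:NameID>alice@example.org</saml:NameID>
  </saml:Subject>
  <saml:AttributeStatement>
    <saml:Attribute Name="role">
      <saml:AttributeValue>contractor</saml:AttributeValue>
    </saml:Attribute>
  </saml:AttributeStatement>
</saml:Assertion>
"""


def summarize_assertion(xml_text: str) -> dict:
    """Extract the issuer, subject, and attributes from a SAML 2.0 assertion."""
    root = ET.fromstring(xml_text.strip())
    attributes = {}
    for attr in root.findall("saml:AttributeStatement/saml:Attribute", SAML_NS):
        values = [value.text for value in attr.findall("saml:AttributeValue", SAML_NS)]
        attributes[attr.get("Name")] = values
    return {
        "issuer": root.findtext("saml:Issuer", namespaces=SAML_NS),
        "subject": root.findtext("saml:Subject/saml:NameID", namespaces=SAML_NS),
        "attributes": attributes,
    }


summary = summarize_assertion(SAMPLE_ASSERTION)
print(summary)
```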

When is a Business Continuity Plan (BCP) considered to be valid?

When it has been validated by the Business Continuity (BC) manager

When it has been validated by the board of directors

When it has been validated by all threat scenarios

When it has been validated by realistic exercises

A Business Continuity Plan (BCP) is considered to be valid when it has been validated by realistic exercises. The BCP is the part of business continuity and disaster recovery planning that focuses on ensuring the continuous operation of the organization’s critical business functions and processes during and after a disruption or disaster. Its components include the business impact analysis, the recovery strategies, the BCP document, the testing, training, and exercises, and the maintenance and review.

Validation by realistic exercises shows that the BCP is practical and applicable, and that it can achieve the desired outcomes and objectives in a real-life scenario. Realistic exercises involve performing and practicing the BCP with the relevant stakeholders, using simulated or hypothetical scenarios, such as a fire drill, a power outage, or a cyberattack.

The other options are not criteria for considering a BCP to be valid, but rather steps or parties involved in developing or approving a BCP.

Validation by the Business Continuity (BC) manager is a step in developing a BCP. The BC manager is responsible for overseeing and coordinating the BCP activities and processes, such as the business impact analysis, the recovery strategies, the BCP document, the testing, training, and exercises, and the maintenance and review. The BC manager can review and verify that the BCP components and outcomes meet the BCP standards and objectives. However, this is not enough to consider the BCP valid, as it does not test or demonstrate the BCP in a realistic scenario.

Validation by the board of directors is an approval of the BCP. The board of directors is the group of people elected by the shareholders to represent their interests and to oversee the strategic direction and governance of the organization. The board can endorse and support the BCP and allocate the necessary resources and funds for it. However, this approval is not enough to consider the BCP valid, as it does not test or demonstrate the BCP in a realistic scenario.

Validation by all threat scenarios is an unrealistic expectation. A threat scenario is a description or simulation of a potential disruption or disaster that might affect the organization’s critical business functions and processes, such as a natural hazard, a human error, or a technical failure. A threat scenario can be used to test the BCP by measuring how effectively it responds to and recovers from the disruption or disaster. However, it is not feasible to validate the BCP against all threat scenarios: there are too many of them, some are unknown, and some are too severe or complex to simulate. Therefore, the BCP should be validated against the most likely or relevant threat scenarios, not all of them.

Which security service is served by the process of encrypting plaintext with the sender’s private key and decrypting ciphertext with the sender’s public key?

Confidentiality

Integrity

Identification

Availability

The security service that is served by the process of encrypting plaintext with the sender’s private key and decrypting ciphertext with the sender’s public key is identification. Identification here means establishing that a message comes from the person or entity that claims to have sent it. It can be achieved with public key cryptography and digital signatures, which are based on this process: the sender encrypts the plaintext with a private key that only the sender holds, and the receiver decrypts the ciphertext with the sender’s public key.

The process serves identification because only the holder of the private key can produce a ciphertext that decrypts correctly with the matching public key, so a successful decryption lets the receiver verify the sender’s identity. The process also provides non-repudiation of origin: the sender cannot credibly deny having sent the message, because the ciphertext serves as proof of origin. It does not prove delivery, so it does not stop a receiver from denying receipt.

The other options are not the security services that are served by the process of encrypting plaintext with the sender’s private key and decrypting ciphertext with the sender’s public key. Confidentiality is the process of ensuring that the message is only readable by the intended parties, and it is achieved by encrypting plaintext with the receiver’s public key and decrypting ciphertext with the receiver’s private key. Integrity is the process of ensuring that the message is not modified or corrupted during transmission, and it is achieved by using hash functions and message authentication codes. Availability is the process of ensuring that the message is accessible and usable by the authorized parties, and it is achieved by using redundancy, backup, and recovery mechanisms.
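The private-key and public-key roles described above can be demonstrated with textbook RSA on deliberately tiny numbers. This is a teaching sketch only: the key values come from the small primes 61 and 53, the function names are hypothetical, and the digest is reduced modulo the tiny modulus. Practical signature schemes use large keys and padding, and normally sign a hash of the message rather than the plaintext itself.

```python
import hashlib

# Textbook RSA with tiny primes (p = 61, q = 53). Insecure; for illustration only.
N = 3233  # public modulus
E = 17    # public exponent (sender's public key)
D = 2753  # private exponent (sender's private key)


def digest(message: bytes) -> int:
    """Hash the message and reduce it so it fits under the toy modulus."""
    return int.from_bytes(hashlib.sha256(message).digest(), "big") % N


def sign(message: bytes) -> int:
    # Transform the digest with the sender's private key.
    return pow(digest(message), D, N)


def verify(message: bytes, signature: int) -> bool:
    # Reverse the transformation with the sender's public key and compare digests.
    return pow(signature, E, N) == digest(message)


message = b"transfer approved"
signature = sign(message)
print(verify(message, signature))            # signature made with the private key
print(verify(message, (signature + 1) % N))  # altered signature is rejected
```

Because anyone holding the public key can reverse the operation, this process identifies the sender but does not keep the message confidential.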

Who in the organization is accountable for classification of data information assets?

Data owner

Data architect

Chief Information Security Officer (CISO)

Chief Information Officer (CIO)

The person in the organization who is accountable for the classification of data information assets is the data owner. The data owner is the person or entity that has the authority and responsibility for the creation, collection, processing, and disposal of a set of data. The data owner is also responsible for defining the purpose, value, and classification of the data, as well as the security requirements and controls for the data. The data owner should be able to determine the impact of the data on the mission of the organization, which means assessing the potential consequences of losing, compromising, or disclosing the data. The impact of the data on the mission of the organization is one of the main criteria for data classification, which helps to establish the appropriate level of protection and handling for the data. The data owner should also ensure that the data is properly labeled, stored, accessed, shared, and destroyed according to the data classification policy and procedures.

The other options are not the persons in the organization who are accountable for the classification of data information assets, but rather persons who have other roles or functions related to data management. The data architect is the person or entity that designs and models the structure, format, and relationships of the data, as well as the data standards, specifications, and lifecycle. The data architect supports the data owner by providing technical guidance and expertise on the data architecture and quality. The Chief Information Security Officer (CISO) is the person or entity that oversees the security strategy, policies, and programs of the organization, as well as the security performance and incidents. The CISO supports the data owner by providing security leadership and governance, as well as ensuring the compliance and alignment of the data security with the organizational objectives and regulations. The Chief Information Officer (CIO) is the person or entity that manages the information technology (IT) resources and services of the organization, as well as the IT strategy and innovation. The CIO supports the data owner by providing IT management and direction, as well as ensuring the availability, reliability, and scalability of the IT infrastructure and applications.

Which of the following mobile code security models relies only on trust?

Code signing

Class authentication

Sandboxing

Type safety

Code signing is the mobile code security model that relies only on trust. Mobile code is software that is transferred from one system to another and executed on the receiving system without explicit installation. It is used in web applications, applets, scripts, and macros, and it carries risks such as malicious code, unauthorized access, and data leakage. Mobile code security models are the techniques used to protect systems and users from these threats. With code signing, the security of the mobile code depends on the reputation and credibility of the code provider: the provider attaches a digital signature to the code, and the code consumer verifies that signature to confirm who published the code and that it has not been altered since it was signed.

Code signing relies only on trust because it does not enforce any security restrictions or controls on the mobile code; it leaves the decision to run the code to the code consumer. Code signing also does not guarantee the quality or functionality of the mobile code, only the authenticity of the code provider and the integrity of the signed code. It can be effective if the code consumer knows and trusts the code provider and the provider follows security standards and best practices. It is ineffective if the code consumer does not check who the provider is, or if the provider is compromised or malicious.
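As a rough illustration of why a valid signature proves origin and integrity but nothing about behavior, here is a minimal sketch of signing and verifying a piece of code. It uses a toy textbook RSA key built from two known Mersenne primes, with no padding, so it is an assumption-laden teaching aid and not how any real code-signing scheme is implemented. The names `sign`, `verify`, and `applet` are hypothetical.

```python
import hashlib

# Toy RSA key built from two known Mersenne primes. Illustration only:
# real code signing uses vetted libraries, padding, and much larger keys.
P = 2**61 - 1
Q = 2**89 - 1
N = P * Q                          # public modulus
E = 65537                          # public exponent
D = pow(E, -1, (P - 1) * (Q - 1))  # private exponent (needs Python 3.8+)


def digest(code: bytes) -> int:
    """Hash the code and reduce the hash into the range of the toy modulus."""
    return int.from_bytes(hashlib.sha256(code).digest(), "big") % N


def sign(code: bytes) -> int:
    """Provider side: sign the digest with the private exponent."""
    return pow(digest(code), D, N)


def verify(code: bytes, signature: int) -> bool:
    """Consumer side: check the signature using only the public key (N, E)."""
    return pow(signature, E, N) == digest(code)


applet = b"print('hello from mobile code')"
signature = sign(applet)

# The unmodified code verifies; any change to the code breaks the signature.
untampered_ok = verify(applet, signature)
tampered_ok = verify(applet + b"; do_something_else()", signature)
```

Note that `verify` never inspects what the code does. A signed but malicious applet would verify just as well, which is the sense in which the model rests on trust in the provider.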

The other options are not mobile code security models that rely only on trust, but rather on other techniques that limit or isolate the mobile code. Class authentication is a mobile code security model that verifies the permissions and capabilities of the mobile code based on its class or type, and allows or denies the execution of the mobile code accordingly. Sandboxing is a mobile code security model that executes the mobile code in a separate and restricted environment, and prevents the mobile code from accessing or affecting the system resources or data. Type safety is a mobile code security model that checks the validity and consistency of the mobile code, and prevents the mobile code from performing illegal or unsafe operations.

The use of private and public encryption keys is fundamental in the implementation of which of the following?

Diffie-Hellman algorithm

Secure Sockets Layer (SSL)

Advanced Encryption Standard (AES)

Message Digest 5 (MD5)

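The use of private and public encryption keys is fundamental in the implementation of Secure Sockets Layer (SSL). SSL, since superseded by Transport Layer Security (TLS), is a protocol that provides secure communication over the Internet by using public key cryptography and digital certificates. In outline, the server presents a digital certificate containing its public key, the client validates the certificate, the two parties use public key cryptography to establish a shared secret key, and that key then protects the data exchanged during the session.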

The use of private and public encryption keys is fundamental in the implementation of SSL because it enables the authentication of the parties, the establishment of the shared secret key, and the protection of the data from eavesdropping, tampering, and replay attacks.

The other options do not depend on private and public encryption keys in the same way. The Diffie-Hellman algorithm is a key agreement method: each party combines its own private value with the other party’s public value to derive a shared secret key, but those values are never used to encrypt or decrypt data. Advanced Encryption Standard (AES) is a symmetric encryption algorithm that uses a single secret key for both encryption and decryption. Message Digest 5 (MD5) is a hash function that produces a fixed-length output from a variable-length input using a one-way mathematical function, and it uses no key at all.
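The Diffie-Hellman exchange described above can be sketched in a few lines. This is a toy example with the small textbook values p = 23 and g = 5, chosen as assumptions for readability. Real deployments use very large parameters or elliptic curves.

```python
# Public parameters, agreed in the open (tiny textbook values).
p = 23  # prime modulus
g = 5   # generator

# Each party keeps one value private...
alice_private = 6
bob_private = 15

# ...and sends the corresponding public value to the other party.
alice_public = pow(g, alice_private, p)
bob_public = pow(g, bob_private, p)

# Each side combines its own private value with the other's public value.
alice_shared = pow(bob_public, alice_private, p)
bob_shared = pow(alice_public, bob_private, p)

# Both arrive at the same secret without ever transmitting it.
same_secret = alice_shared == bob_shared
```

Nothing is encrypted in this exchange. The result is only a shared value, which would then typically be used to derive a key for a symmetric cipher such as AES.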

Which of the following is a PRIMARY advantage of using a third-party identity service?

Consolidation of multiple providers

Directory synchronization

Web based logon

Automated account management

Consolidation of multiple providers is the primary advantage of using a third-party identity service. A third-party identity service is a service that provides identity and access management (IAM) functions, such as authentication, authorization, and federation, for multiple applications or systems, using a single identity provider (IdP). A third-party identity service can offer various benefits, such as:

Consolidation of multiple providers is the primary advantage of using a third-party identity service, because it can simplify and streamline the IAM architecture and processes, by reducing the number of IdPs and IAM systems that are involved in managing the identities and access for multiple applications or systems. Consolidation of multiple providers can also help to avoid the issues or risks that might arise from having multiple IdPs and IAM systems, such as the inconsistency, redundancy, or conflict of the IAM policies and controls, or the inefficiency, vulnerability, or disruption of the IAM functions.

The other options are not the primary advantages of using a third-party identity service, but rather secondary or specific advantages for different aspects or scenarios of using a third-party identity service. Directory synchronization is an advantage of using a third-party identity service, but it is more relevant for the scenario where the organization has an existing directory service, such as LDAP or Active Directory, that stores and manages the user accounts and attributes, and wants to synchronize them with the third-party identity service, to enable the SSO or federation for the users. Web based logon is an advantage of using a third-party identity service, but it is more relevant for the aspect where the third-party identity service uses a web-based protocol, such as SAML or OAuth, to facilitate the SSO or federation for the users, by redirecting them to a web-based logon page, where they can enter their credentials or consent. Automated account management is an advantage of using a third-party identity service, but it is more relevant for the aspect where the third-party identity service provides the IAM functions, such as provisioning, deprovisioning, or updating, for the user accounts and access rights, using an automated or self-service mechanism, such as SCIM or JIT.

Which of the following types of business continuity tests includes assessment of resilience to internal and external risks without endangering live operations?

Walkthrough

Simulation

Parallel

White box

Simulation is the type of business continuity test that includes assessment of resilience to internal and external risks without endangering live operations. Business continuity is the ability of an organization to maintain or resume its critical functions and operations in the event of a disruption or disaster. Business continuity testing is the process of evaluating and validating the effectiveness and readiness of the business continuity plan (BCP) and the disaster recovery plan (DRP) through various methods and scenarios. Business continuity testing can provide several benefits, such as:

There are different types of business continuity tests, depending on the scope, purpose, and complexity of the test. Some of the common types are:

Simulation is the type of business continuity test that includes assessment of resilience to internal and external risks without endangering live operations, because it can simulate various types of risks, such as natural, human, or technical, and assess how the organization and its systems can cope and recover from them, without actually causing any harm or disruption to the live operations. Simulation can also help to identify and mitigate any potential risks that might affect the live operations, and to improve the resilience and preparedness of the organization and its systems.

The other options are not types of business continuity tests that assess resilience to internal and external risks without endangering live operations. A walkthrough is a review and discussion of the BCP and DRP, without any actual testing or practice, so it does not assess resilience. A parallel test does not endanger live operations, because they continue while the alternate site or system is activated and operated. White box is not a business continuity test type; the term describes software or penetration testing performed with full knowledge of the internals of the system under test. A full interruption test, which is not among the options, does endanger live operations, because it shuts them down and transfers them to the alternate site or system.

A continuous information security-monitoring program can BEST reduce risk through which of the following?

Collecting security events and correlating them to identify anomalies

Facilitating system-wide visibility into the activities of critical user accounts

Encompassing people, process, and technology

Logging both scheduled and unscheduled system changes

A continuous information security monitoring program can best reduce risk through encompassing people, process, and technology. A continuous information security monitoring program is a process that involves maintaining the ongoing awareness of the security status, events, and activities of a system or network, by collecting, analyzing, and reporting the security data and information, using various methods and tools. A continuous information security monitoring program can provide several benefits, such as:

A continuous information security monitoring program can best reduce risk through encompassing people, process, and technology, because it can ensure that the continuous information security monitoring program is holistic and comprehensive, and that it covers all the aspects and elements of the system or network security. People, process, and technology are the three pillars of a continuous information security monitoring program, and they represent the following:

The other options are not the best ways to reduce risk through a continuous information security monitoring program, but rather specific or partial ways that can contribute to the risk reduction. Collecting security events and correlating them to identify anomalies is a specific way to reduce risk through a continuous information security monitoring program, but it is not the best way, because it only focuses on one aspect of the security data and information, and it does not address the other aspects, such as the security objectives and requirements, the security controls and measures, and the security feedback and improvement. Facilitating system-wide visibility into the activities of critical user accounts is a partial way to reduce risk through a continuous information security monitoring program, but it is not the best way, because it only covers one element of the system or network security, and it does not cover the other elements, such as the security threats and vulnerabilities, the security incidents and impacts, and the security response and remediation. Logging both scheduled and unscheduled system changes is a specific way to reduce risk through a continuous information security monitoring program, but it is not the best way, because it only focuses on one type of the security events and activities, and it does not focus on the other types, such as the security alerts and notifications, the security analysis and correlation, and the security reporting and documentation.

What would be the MOST cost effective solution for a Disaster Recovery (DR) site given that the organization’s systems cannot be unavailable for more than 24 hours?

Warm site

Hot site

Mirror site

Cold site

A warm site is the most cost effective solution for a disaster recovery (DR) site given that the organization’s systems cannot be unavailable for more than 24 hours. A DR site is a backup facility that can be used to restore the normal operation of the organization’s IT systems and infrastructure after a disruption or disaster. A DR site can have different levels of readiness and functionality, depending on the organization’s recovery objectives and budget. The main types of DR sites are:

A warm site is the most cost effective solution for a disaster recovery (DR) site given that the organization’s systems cannot be unavailable for more than 24 hours, because it can provide a balance between the recovery time and the recovery cost. A warm site can enable the organization to resume its critical functions and operations within a reasonable time frame, without spending too much on the DR site maintenance and operation. A warm site can also provide some flexibility and scalability for the organization to adjust its recovery strategies and resources according to its needs and priorities.

The other options are not the most cost effective solutions for a disaster recovery (DR) site given that the organization’s systems cannot be unavailable for more than 24 hours, but rather solutions that are either too costly or too slow for the organization’s recovery objectives and budget. A hot site is a solution that is too costly for a disaster recovery (DR) site given that the organization’s systems cannot be unavailable for more than 24 hours, because it requires the organization to invest a lot of money on the DR site equipment, software, and services, and to pay for the ongoing operational and maintenance costs. A hot site may be more suitable for the organization’s systems that cannot be unavailable for more than a few hours or minutes, or that have very high availability and performance requirements. A mirror site is a solution that is too costly for a disaster recovery (DR) site given that the organization’s systems cannot be unavailable for more than 24 hours, because it requires the organization to duplicate its entire primary site, with the same hardware, software, data, and applications, and to keep them online and synchronized at all times. A mirror site may be more suitable for the organization’s systems that cannot afford any downtime or data loss, or that have very strict compliance and regulatory requirements. A cold site is a solution that is too slow for a disaster recovery (DR) site given that the organization’s systems cannot be unavailable for more than 24 hours, because it requires the organization to spend a lot of time and effort on the DR site installation, configuration, and restoration, and to rely on other sources of backup data and applications. A cold site may be more suitable for the organization’s systems that can be unavailable for more than a few days or weeks, or that have very low criticality and priority.
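The trade-off above amounts to picking the cheapest site type whose recovery time fits inside the allowed downtime. The sketch below makes that reasoning explicit. The recovery hours and cost ranks are illustrative assumptions, not figures from the source or from any standard, and `DrSite` and `cheapest_site` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DrSite:
    name: str
    typical_recovery_hours: float  # assumed, for illustration only
    relative_cost: int             # assumed ranking, 1 = cheapest


# Illustrative values only; real figures depend on the organization.
SITES = [
    DrSite("cold", 168.0, 1),
    DrSite("warm", 12.0, 2),
    DrSite("hot", 1.0, 3),
    DrSite("mirror", 0.0, 4),
]


def cheapest_site(max_downtime_hours: float) -> DrSite:
    """Return the lowest-cost site that can recover within the limit."""
    candidates = [
        site for site in SITES
        if site.typical_recovery_hours <= max_downtime_hours
    ]
    if not candidates:
        raise ValueError("no site type meets the downtime limit")
    return min(candidates, key=lambda site: site.relative_cost)


# With a 24-hour limit the cold site is too slow, and the warm site is
# the cheapest of the remaining options under these assumed numbers.
choice = cheapest_site(24)
```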

What is the PRIMARY reason for implementing change management?

Certify and approve releases to the environment

Provide version rollbacks for system changes

Ensure that all applications are approved

Ensure accountability for changes to the environment

Ensuring accountability for changes to the environment is the primary reason for implementing change management. Change management is a process that ensures that any changes to the system or network environment, such as the hardware, software, configuration, or documentation, are planned, approved, implemented, and documented in a controlled and consistent manner. Change management can provide several benefits, such as:

Ensuring accountability for changes to the environment is the primary reason for implementing change management, because it can ensure that the changes are authorized, justified, and traceable, and that the parties involved in the changes are responsible and accountable for their actions and results. Accountability can also help to deter or detect any unauthorized or malicious changes that might compromise the system or network environment.

The other options are not the primary reasons for implementing change management, but rather secondary or specific reasons for different aspects or phases of change management. Certifying and approving releases to the environment is a reason for implementing change management, but it is more relevant for the approval phase of change management, which is the phase that involves reviewing and validating the changes and their impacts, and granting or denying the permission to proceed with the changes. Providing version rollbacks for system changes is a reason for implementing change management, but it is more relevant for the implementation phase of change management, which is the phase that involves executing and monitoring the changes and their effects, and providing the backup and recovery options for the changes. Ensuring that all applications are approved is a reason for implementing change management, but it is more relevant for the application changes, which are the changes that affect the software components or services that provide the functionality or logic of the system or network environment.

What should be the FIRST action to protect the chain of evidence when a desktop computer is involved?

Take the computer to a forensic lab

Make a copy of the hard drive

Start documenting

Turn off the computer

Making a copy of the hard drive should be the first action to protect the chain of evidence when a desktop computer is involved. A chain of evidence, also known as a chain of custody, is a process that documents and preserves the integrity and authenticity of the evidence collected from a crime scene, such as a desktop computer. A chain of evidence should include information such as:

Making a copy of the hard drive should be the first action to protect the chain of evidence when a desktop computer is involved, because it ensures that the original hard drive is not altered, damaged, or destroyed during the forensic analysis, and that the copy can be used as a reliable and admissible source of evidence. Making the copy should also involve a write blocker, a hardware device or software tool that prevents any modification or deletion of the data on the hard drive, and generating a hash value, a fixed-length digest that changes if the data changes and can therefore verify that the copy matches the original.
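The hash check mentioned above can be sketched as follows. This is a minimal example that assumes the original and the copy are available as image files; the small temporary files stand in for real disk images, and the function names are hypothetical. It does not replace a write blocker or a forensic imaging tool.

```python
import hashlib
import os
import tempfile


def sha256_of_file(path: str, chunk_size: int = 1024 * 1024) -> str:
    """Hash a file in chunks so large images do not need to fit in memory."""
    hasher = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            hasher.update(chunk)
    return hasher.hexdigest()


def copy_matches_original(original_path: str, copy_path: str) -> bool:
    """A copy is a faithful image only if both digests are identical."""
    return sha256_of_file(original_path) == sha256_of_file(copy_path)


# Demonstration with small stand-in files instead of real disk images.
with tempfile.TemporaryDirectory() as workdir:
    original = os.path.join(workdir, "original.img")
    good_copy = os.path.join(workdir, "good_copy.img")
    bad_copy = os.path.join(workdir, "bad_copy.img")

    with open(original, "wb") as handle:
        handle.write(b"sector data " * 1000)
    with open(good_copy, "wb") as handle:
        handle.write(b"sector data " * 1000)
    with open(bad_copy, "wb") as handle:
        handle.write(b"sector data " * 999 + b"sector dat4 ")

    good_copy_ok = copy_matches_original(original, good_copy)
    bad_copy_ok = copy_matches_original(original, bad_copy)
```

Recording the digest in the chain-of-custody documentation at acquisition time lets anyone re-hash the copy later and confirm it has not changed.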

The other options are not the first actions to protect the chain of evidence when a desktop computer is involved, but rather actions that should be done after or along with making a copy of the hard drive. Taking the computer to a forensic lab should be done after making the copy, because it ensures that the computer is transported and stored in a secure and controlled environment, and that the forensic analysis is conducted by qualified and authorized personnel. Starting documentation should be done along with making the copy, because it ensures that the chain of evidence is maintained and recorded throughout the forensic process, and that the evidence can be traced and verified. Turning off the computer should be done after making the copy, because it ensures that the computer is powered down and disconnected from any network or device, and is protected from further damage or tampering.

A Business Continuity Plan/Disaster Recovery Plan (BCP/DRP) will provide which of the following?

Guaranteed recovery of all business functions

Minimization of the need for decision making during a crisis

Insurance against litigation following a disaster

Protection from loss of organization resources

Minimization of the need for decision making during a crisis is the main benefit that a Business Continuity Plan/Disaster Recovery Plan (BCP/DRP) will provide. A BCP/DRP is a set of policies, procedures, and resources that enable an organization to continue or resume its critical functions and operations in the event of a disruption or disaster. A BCP/DRP can provide several benefits, such as:

Minimization of the need for decision making during a crisis is the main benefit that a BCP/DRP will provide, because it can ensure that the organization and its staff have a clear and consistent guidance and direction on how to respond and act during a disruption or disaster, and avoid any confusion, uncertainty, or inconsistency that might worsen the situation or impact. A BCP/DRP can also help to reduce the stress and pressure on the organization and its staff during a crisis, and increase their confidence and competence in executing the plans.

The other options are not the benefits that a BCP/DRP will provide, but rather unrealistic or incorrect expectations or outcomes of a BCP/DRP. Guaranteed recovery of all business functions is not a benefit that a BCP/DRP will provide, because it is not possible or feasible to recover all business functions after a disruption or disaster, especially if the disruption or disaster is severe or prolonged. A BCP/DRP can only prioritize and recover the most critical or essential business functions, and may have to suspend or terminate the less critical or non-essential business functions. Insurance against litigation following a disaster is not a benefit that a BCP/DRP will provide, because it is not a guarantee or protection that the organization will not face any legal or regulatory consequences or liabilities after a disruption or disaster, especially if the disruption or disaster is caused by the organization’s negligence or misconduct. A BCP/DRP can only help to mitigate or reduce the legal or regulatory risks, and may have to comply with or report to the relevant authorities or parties. Protection from loss of organization resources is not a benefit that a BCP/DRP will provide, because it is not a prevention or avoidance of any damage or destruction of the organization’s assets or resources during a disruption or disaster, especially if the disruption or disaster is physical or natural. A BCP/DRP can only help to restore or replace the lost or damaged assets or resources, and may have to incur some costs or losses.

An organization is found lacking the ability to properly establish performance indicators for its Web hosting solution during an audit. What would be the MOST probable cause?

Absence of a Business Intelligence (BI) solution

Inadequate cost modeling

Improper deployment of the Service-Oriented Architecture (SOA)

Insufficient Service Level Agreement (SLA)

Insufficient Service Level Agreement (SLA) would be the most probable cause for an organization to lack the ability to properly establish performance indicators for its Web hosting solution during an audit. A Web hosting solution is a service that provides the infrastructure, resources, and tools for hosting and maintaining a website or a web application on the internet. A Web hosting solution can offer various benefits, such as:

A Service Level Agreement (SLA) is a contract or an agreement that defines the expectations, responsibilities, and obligations of the parties involved in a service, such as the service provider and the service consumer. An SLA can include various components, such as:

Insufficient SLA would be the most probable cause for an organization to lack the ability to properly establish performance indicators for its Web hosting solution during an audit, because it could mean that the SLA does not include or specify the appropriate service level indicators or objectives for the Web hosting solution, or that the SLA does not provide or enforce the adequate service level reporting or penalties for the Web hosting solution. This could affect the ability of the organization to measure and assess the Web hosting solution quality, performance, and availability, and to identify and address any issues or risks in the Web hosting solution.
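To show how an SLA objective turns into a measurable performance indicator, the sketch below converts an availability target into a downtime budget and checks observed downtime against it. The 99.9% target, the 30-day period, and the function names are assumptions chosen for illustration.

```python
def allowed_downtime_minutes(availability_percent: float,
                             period_days: int = 30) -> float:
    """Downtime budget implied by an availability target over a period."""
    total_minutes = period_days * 24 * 60
    return total_minutes * (100.0 - availability_percent) / 100.0


def meets_target(observed_downtime_minutes: float,
                 availability_percent: float,
                 period_days: int = 30) -> bool:
    """True when the observed downtime stays within the budget."""
    budget = allowed_downtime_minutes(availability_percent, period_days)
    return observed_downtime_minutes <= budget


# A 99.9% target over 30 days leaves roughly 43.2 minutes of downtime.
budget = allowed_downtime_minutes(99.9)
within_sla = meets_target(30, 99.9)
outside_sla = meets_target(60, 99.9)
```

Without a stated target and reporting period in the SLA, there is no budget to compute and nothing against which an auditor can compare measured downtime.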

The other options are not the most probable causes for an organization to lack the ability to properly establish performance indicators for its Web hosting solution during an audit, but rather factors that affect the Web hosting solution in other ways. None of them affects the definition or specification of the performance indicators themselves.

Absence of a Business Intelligence (BI) solution affects the ability of the organization to analyze and use the data from the Web hosting solution, such as web traffic, behavior, or conversion. A BI solution collects, integrates, processes, and presents data from various sources to support the decision making and planning of the organization. Its absence limits the analysis and use of performance indicators, not their definition.

Inadequate cost modeling affects the ability of the organization to estimate and optimize the cost and value of the Web hosting solution, such as the web hosting fees, maintenance costs, or return on investment. A cost model helps the organization to calculate and compare the cost and value of alternative solutions and to choose the most efficient one. Its inadequacy affects cost estimation, not the definition of performance indicators.

Improper deployment of the Service-Oriented Architecture (SOA) affects the ability of the organization to design and develop the Web hosting solution, such as the web services, components, or interfaces. An SOA is a software architecture that modularizes, standardizes, and integrates the software components or services that provide the functionality or logic of the solution. Improper deployment affects the design and development of the Web hosting solution, not the definition of its performance indicators.

With what frequency should monitoring of a control occur when implementing Information Security Continuous Monitoring (ISCM) solutions?

Continuously without exception for all security controls

Before and after each change of the control

At a rate concurrent with the volatility of the security control

Only during system implementation and decommissioning

Monitoring of a control should occur at a rate concurrent with the volatility of the security control when implementing Information Security Continuous Monitoring (ISCM) solutions. ISCM is a process that involves maintaining the ongoing awareness of the security status, events, and activities of a system or network, by collecting, analyzing, and reporting the security data and information, using various methods and tools. ISCM can provide several benefits, such as:

A security control is a measure or mechanism that is implemented to protect the system or network from the security threats or risks, by preventing, detecting, or correcting the security incidents or impacts. A security control can have various types, such as administrative, technical, or physical, and various attributes, such as preventive, detective, or corrective. A security control can also have different levels of volatility, which is the degree or frequency of change or variation of the security control, due to various factors, such as the security requirements, the threat landscape, or the system or network environment.

Monitoring of a control should occur at a rate concurrent with the volatility of the security control when implementing ISCM solutions, because it can ensure that the ISCM solutions can capture and reflect the current and accurate state and performance of the security control, and can identify and report any issues or risks that might affect the security control. Monitoring of a control at a rate concurrent with the volatility of the security control can also help to optimize the ISCM resources and efforts, by allocating them according to the priority and urgency of the security control.
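One way to picture monitoring "at a rate concurrent with volatility" is a simple mapping from each control's volatility to a monitoring interval. The sketch below is hypothetical: the three volatility levels, the intervals, and the example controls are assumptions for illustration, not values prescribed by the source or by any ISCM guidance.

```python
from datetime import timedelta
from typing import Dict

# Hypothetical mapping: the more often a control changes, the more
# frequently it is checked. Real intervals are an organizational decision.
INTERVAL_BY_VOLATILITY: Dict[str, timedelta] = {
    "high": timedelta(hours=1),
    "medium": timedelta(days=1),
    "low": timedelta(days=30),
}


def monitoring_interval(volatility: str) -> timedelta:
    """Look up how often a control with this volatility is monitored."""
    try:
        return INTERVAL_BY_VOLATILITY[volatility]
    except KeyError:
        raise ValueError(f"unknown volatility level: {volatility!r}")


def build_schedule(controls: Dict[str, str]) -> Dict[str, timedelta]:
    """Assign each control an interval based on its volatility."""
    return {
        name: monitoring_interval(volatility)
        for name, volatility in controls.items()
    }


# Example controls with assumed volatility ratings.
plan = build_schedule({
    "firewall_rules": "high",
    "patch_levels": "medium",
    "physical_access_policy": "low",
})
```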

The other options are not the correct frequencies for monitoring of a control when implementing ISCM solutions, but rather incorrect or unrealistic frequencies that would cause problems or inefficiencies.

Continuously without exception for all security controls is incorrect, because it is neither feasible nor necessary to monitor all security controls at the same constant rate regardless of their volatility or importance. Doing so would consume excessive resources and could overwhelm the ISCM solutions with too much or irrelevant data.

Before and after each change of the control is incorrect, because monitoring only around changes ignores the normal operation of the control. The ISCM solutions would miss the security status, events, and activities that occur between changes, and would be slow to detect and respond to issues or incidents that affect the control.

Only during system implementation and decommissioning is incorrect, because it covers only the initial and final stages of the system or network lifecycle and not the operational or maintenance stages. The ISCM solutions would overlook the security status, events, and activities that occur during ongoing operation, and would have little opportunity to improve and optimize the control.

Recovery strategies of a Disaster Recovery Plan (DRP) MUST be aligned with which of the following?

Hardware and software compatibility issues

Applications’ criticality and downtime tolerance

Budget constraints and requirements

Cost/benefit analysis and business objectives

Recovery strategies of a Disaster Recovery Plan (DRP) must be aligned with the cost/benefit analysis and business objectives. A DRP is the part of a BCP/DRP that focuses on restoring the normal operation of the organization’s IT systems and infrastructure after a disruption or disaster. A DRP should include various components, such as:

Recovery strategies of a DRP must be aligned with the cost/benefit analysis and business objectives, because it can ensure that the DRP is feasible and suitable, and that it can achieve the desired outcomes and objectives in a cost-effective and efficient manner. A cost/benefit analysis is a technique that compares the costs and benefits of different recovery strategies, and determines the optimal one that provides the best value for money. A business objective is a goal or a target that the organization wants to achieve through its IT systems and infrastructure, such as increasing the productivity, profitability, or customer satisfaction. A recovery strategy that is aligned with the cost/benefit analysis and business objectives can help to:

The other options are not the factors that the recovery strategies of a DRP must be aligned with, but rather factors that should be considered or addressed when developing or implementing the recovery strategies of a DRP. Hardware and software compatibility issues are factors that should be considered when developing the recovery strategies of a DRP, because they can affect the functionality and interoperability of the IT systems and infrastructure, and may require additional resources or adjustments to resolve them. Applications’ criticality and downtime tolerance are factors that should be addressed when implementing the recovery strategies of a DRP, because they can determine the priority and urgency of the recovery for different applications, and may require different levels of recovery objectives and resources. Budget constraints and requirements are factors that should be considered when developing the recovery strategies of a DRP, because they can limit the availability and affordability of the IT resources and funds for the recovery, and may require trade-offs or compromises to balance them.
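The cost/benefit comparison described above can be illustrated with a small Python sketch. Every figure here is hypothetical (strategy costs, recovery times, outage cost per hour, outage frequency), and the `net_benefit` formula is only one simple way to compare strategies, not a prescribed method.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RecoveryStrategy:
    name: str
    annual_cost: float      # hypothetical yearly cost of maintaining the strategy
    recovery_hours: float   # hypothetical time needed to restore service


def net_benefit(strategy, outage_cost_per_hour, baseline_hours, outages_per_year):
    """Annual loss avoided compared with having no strategy, minus the strategy's cost."""
    hours_saved = max(baseline_hours - strategy.recovery_hours, 0.0)
    loss_avoided = hours_saved * outage_cost_per_hour * outages_per_year
    return loss_avoided - strategy.annual_cost


def best_strategy(strategies, outage_cost_per_hour, baseline_hours, outages_per_year):
    """Pick the strategy with the highest net benefit under the given assumptions."""
    return max(
        strategies,
        key=lambda s: net_benefit(s, outage_cost_per_hour, baseline_hours, outages_per_year),
    )


# Illustrative numbers only.
STRATEGIES = [
    RecoveryStrategy("cold site", 20_000, 72),
    RecoveryStrategy("warm site", 60_000, 24),
    RecoveryStrategy("hot site", 250_000, 1),
]

if __name__ == "__main__":
    choice = best_strategy(STRATEGIES, outage_cost_per_hour=5_000,
                           baseline_hours=120, outages_per_year=0.5)
    print(choice.name)  # warm site
```

With these assumed numbers the fastest option is not the best value: the hot site avoids the most downtime, but its cost outweighs the extra loss it prevents. A business objective such as a strict downtime limit could still override the result.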

What is the MOST important step during forensic analysis when trying to learn the purpose of an unknown application?

Disable all unnecessary services

Ensure chain of custody

Prepare another backup of the system

Isolate the system from the network

Isolating the system from the network is the most important step during forensic analysis when trying to learn the purpose of an unknown application. An unknown application is an application that is not recognized or authorized by the system or network administrator, and that may have been installed or executed without the user’s knowledge or consent. An unknown application may have various purposes, such as:

Forensic analysis is a process that involves examining and investigating the system or network for any evidence or traces of the unknown application, such as its origin, nature, behavior, and impact. Forensic analysis can provide several benefits, such as:

Isolating the system from the network is the most important step during forensic analysis when trying to learn the purpose of an unknown application, because it can ensure that the system is isolated and protected from any external or internal influences or interferences, and that the forensic analysis is conducted in a safe and controlled environment. Isolating the system from the network can also help to:

The other options are not the most important steps during forensic analysis when trying to learn the purpose of an unknown application, but rather steps that should be done after or along with isolating the system from the network. Disabling all unnecessary services is a step that should be done after isolating the system from the network, because it can ensure that the system is optimized and simplified for the forensic analysis, and that the system resources and functions are not consumed or affected by any irrelevant or redundant services. Ensuring chain of custody is a step that should be done along with isolating the system from the network, because it can ensure that the integrity and authenticity of the evidence are maintained and documented throughout the forensic process, and that the evidence can be traced and verified. Preparing another backup of the system is a step that should be done after isolating the system from the network, because it can ensure that the system data and configuration are preserved and replicated for the forensic analysis, and that the system can be restored and recovered in case of any damage or loss.

Which of the following does Temporal Key Integrity Protocol (TKIP) support?

Multicast and broadcast messages

Coordination of IEEE 802.11 protocols

Wired Equivalent Privacy (WEP) systems

Synchronization of multiple devices

Temporal Key Integrity Protocol (TKIP) supports multicast and broadcast messages by using a group temporal key that is shared by all the devices in the same wireless network. This key is used to encrypt and decrypt the messages that are sent to multiple recipients at once. TKIP also supports unicast messages by using a pairwise temporal key that is unique for each device and session. TKIP does not support coordination of IEEE 802.11 protocols, as it is a protocol itself that was designed to replace WEP. TKIP can run on hardware built for WEP, but that is backward compatibility rather than support for WEP itself, as TKIP provides more security features than WEP. TKIP does not support synchronization of multiple devices, as it does not provide any clock or time synchronization mechanism. References: 1: Temporal Key Integrity Protocol - Wikipedia. 2: Wi-Fi Security: Should You Use WPA2-AES, WPA2-TKIP, or Both? - How-To Geek.
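The choice between the group key and a pairwise key can be sketched in Python as follows. This is a simplified illustration, not TKIP itself: the function names and the key table are hypothetical, and no actual encryption is performed. The one protocol fact relied on is that multicast and broadcast MAC addresses have the lowest bit of the first octet set.

```python
def is_group_address(mac: str) -> bool:
    """True for multicast/broadcast MACs: the low bit of the first octet is 1."""
    first_octet = int(mac.split(":")[0], 16)
    return bool(first_octet & 0x01)


def select_temporal_key(dest_mac: str, pairwise_keys: dict, group_key: bytes) -> bytes:
    """Return the shared group key for group traffic, else the station's own key."""
    if is_group_address(dest_mac):
        return group_key
    return pairwise_keys[dest_mac.lower()]


if __name__ == "__main__":
    # Hypothetical key material for illustration only.
    group_key = b"shared-by-all-stations"
    pairwise_keys = {"00:1a:2b:3c:4d:5e": b"unique-to-this-station"}

    print(select_temporal_key("ff:ff:ff:ff:ff:ff", pairwise_keys, group_key))
    print(select_temporal_key("00:1A:2B:3C:4D:5E", pairwise_keys, group_key))
```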

Internet Protocol (IP) source address spoofing is used to defeat

address-based authentication.

Address Resolution Protocol (ARP).

Reverse Address Resolution Protocol (RARP).

Transmission Control Protocol (TCP) hijacking.

Internet Protocol (IP) source address spoofing is used to defeat address-based authentication, which is a method of verifying the identity of a user or a system based on their IP address. IP source address spoofing involves forging the IP header of a packet to make it appear as if it came from a trusted or authorized source, and bypassing the authentication check. IP source address spoofing can be used for various malicious purposes, such as denial-of-service attacks, man-in-the-middle attacks, or session hijacking [3][4]. References: 3: CISSP All-in-One Exam Guide, Eighth Edition, Chapter 5, page 527. 4: CISSP For Dummies, 7th Edition, Chapter 5, page 153.
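The weakness of address-based authentication can be shown with a minimal Python simulation. Nothing here touches a real network: the `Packet` class, the allowlist, and all addresses are hypothetical, and `true_origin` exists only to make the point that the receiver never sees it.

```python
from dataclasses import dataclass

# Hypothetical allowlist of "trusted" source addresses.
TRUSTED_SOURCES = {"10.0.0.5"}


@dataclass(frozen=True)
class Packet:
    src_ip: str       # header field written by the sender; the receiver cannot verify it
    dst_ip: str
    payload: bytes
    true_origin: str  # for illustration only; not visible to the receiver


def address_based_auth(packet: Packet) -> bool:
    """Accept a packet purely because its claimed source address is trusted."""
    return packet.src_ip in TRUSTED_SOURCES


legitimate = Packet("10.0.0.5", "10.0.0.1", b"status", true_origin="10.0.0.5")
# The attacker sits at 203.0.113.9 but forges the trusted address in the header.
spoofed = Packet("10.0.0.5", "10.0.0.1", b"delete all", true_origin="203.0.113.9")

if __name__ == "__main__":
    print(address_based_auth(legitimate))  # True
    print(address_based_auth(spoofed))     # True: the forged header passes the check
```

Because the check consults only a field the sender controls, the two packets are indistinguishable to the receiver, which is why authentication should rest on something the sender cannot simply assert, such as a cryptographic credential.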

An advantage of link encryption in a communications network is that it

makes key management and distribution easier.

protects data from start to finish through the entire network.

improves the efficiency of the transmission.

encrypts all information, including headers and routing information.

An advantage of link encryption in a communications network is that it encrypts all information, including headers and routing information. Link encryption is a type of encryption that is applied at the data link layer of the OSI model, and encrypts the entire packet or frame as it travels from one node to another [1]. Link encryption can protect the confidentiality and integrity of the data, as well as the identity and location of the nodes. Link encryption does not make key management and distribution easier, as it requires each node to have a separate key for each link. Link encryption does not protect data from start to finish through the entire network, as it only encrypts the data while it is in transit, and decrypts it at each node. Link encryption does not improve the efficiency of the transmission, as it adds overhead and latency to the communication. References: 1: CISSP All-in-One Exam Guide, Eighth Edition, Chapter 7, page 419.
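The hop-by-hop behavior described above can be sketched in Python. The cipher below is a toy keystream XOR built from SHA-256 and is NOT secure; it stands in for whatever cipher a real link encryptor would use. The frame layout, key names, and function names are all hypothetical.

```python
import hashlib
from itertools import count


def keystream_xor(key: bytes, data: bytes) -> bytes:
    """Toy stream cipher (not secure): XOR data with a SHA-256-derived keystream.

    Applying it twice with the same key returns the original data.
    """
    stream = bytearray()
    for counter in count():
        if len(stream) >= len(data):
            break
        stream += hashlib.sha256(key + counter.to_bytes(4, "big")).digest()
    return bytes(a ^ b for a, b in zip(data, stream))


def send_over_links(frame, link_keys):
    """Carry a frame across successive links, one key per link.

    Returns (on_wire, at_nodes): the ciphertext seen on each link and the
    plaintext that is exposed inside each receiving node.
    """
    on_wire, at_nodes = [], []
    for key in link_keys:
        ciphertext = keystream_xor(key, frame)  # whole frame, header included
        on_wire.append(ciphertext)
        frame = keystream_xor(key, ciphertext)  # the receiving node decrypts
        at_nodes.append(frame)
    return on_wire, at_nodes


if __name__ == "__main__":
    frame = b"DST=10.0.0.9|hello"  # hypothetical header and payload
    on_wire, at_nodes = send_over_links(frame, [b"key-A-B", b"key-B-C"])
    print(on_wire[0].hex())  # header and payload are both unreadable on the wire
    print(at_nodes[0])       # but the intermediate node sees the full plaintext
```

The sketch reflects both sides of the trade-off: routing information is hidden on every link, yet each link needs its own key and every intermediate node handles the frame in the clear.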

Which security action should be taken FIRST when computer personnel are terminated from their jobs?

Remove their computer access

Require them to turn in their badge

Conduct an exit interview

Reduce their physical access level to the facility

The first security action that should be taken when computer personnel are terminated from their jobs is to remove their computer access. Computer access is the ability to log in, use, or modify the computer systems, networks, or data of the organization [3]. Removing computer access can prevent the terminated personnel from accessing or harming the organization’s information assets, or from stealing or leaking sensitive or confidential data. Removing computer access can also reduce the risk of insider threats, such as sabotage, fraud, or espionage. Requiring them to turn in their badge, conducting an exit interview, and reducing their physical access level to the facility are also important security actions that should be taken when computer personnel are terminated from their jobs, but they are not as urgent or critical as removing their computer access. References: 3: Official (ISC)2 CISSP CBK Reference, 5th Edition, Chapter 5, page 249.

Which of the following is a security limitation of File Transfer Protocol (FTP)?

Passive FTP is not compatible with web browsers.

Anonymous access is allowed.

FTP uses Transmission Control Protocol (TCP) ports 20 and 21.

Authentication is not encrypted.

File Transfer Protocol (FTP) is a protocol that enables the transfer of files between a client and a server over a network. FTP has a security limitation in that it does not encrypt the authentication process, meaning that the username and password are sent in clear text over the network. This exposes the credentials to interception and eavesdropping by unauthorized parties, who could then access the files or compromise the system. References: CISSP All-in-One Exam Guide, Eighth Edition, Chapter 5, page 533; CISSP For Dummies, 7th Edition, Chapter 5, page 155.
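A short Python sketch shows how little an eavesdropper needs to do. It builds the client side of a plain FTP login using the standard `USER` and `PASS` commands and then parses the credentials back out of the captured bytes. No network traffic is generated; the function names and credentials are hypothetical, and a real client would wait for the server's reply between the two commands.

```python
def ftp_login_bytes(username: str, password: str) -> bytes:
    """Bytes a plain FTP client sends on the control connection to log in."""
    return f"USER {username}\r\nPASS {password}\r\n".encode("ascii")


def sniff_credentials(captured: bytes) -> dict:
    """Recover credentials from captured control-channel bytes; no decryption needed."""
    creds = {}
    for line in captured.decode("ascii", errors="replace").split("\r\n"):
        verb, _, arg = line.partition(" ")
        if verb.upper() in ("USER", "PASS"):
            creds[verb.upper()] = arg
    return creds


if __name__ == "__main__":
    wire = ftp_login_bytes("alice", "s3cret")  # hypothetical credentials
    print(sniff_credentials(wire))  # {'USER': 'alice', 'PASS': 's3cret'}
```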

Which of the following is the FIRST step in the incident response process?

Determine the cause of the incident

Disconnect the system involved from the network

Isolate and contain the system involved

Investigate all symptoms to confirm the incident

Investigating all symptoms to confirm the incident is the first step in the incident response process. An incident is an event that violates or threatens the security, availability, integrity, or confidentiality of the IT systems or data. An incident response is a process that involves detecting, analyzing, containing, eradicating, recovering, and learning from an incident, using various methods and tools. An incident response can provide several benefits, such as:

Investigating all symptoms to confirm the incident is the first step in the incident response process, because it can ensure that the incident is verified and validated, and that the incident response is initiated and escalated. A symptom is a sign or an indication that an incident may have occurred or is occurring, such as an alert, a log, or a report. Investigating all symptoms to confirm the incident involves collecting and analyzing the relevant data and information from various sources, such as the IT systems, the network, the users, or the external parties, and determining whether an incident has actually happened or is happening, and how serious or urgent it is. Investigating all symptoms to confirm the incident can also help to:

The other options are not the first steps in the incident response process, but rather steps that should be done after or along with investigating all symptoms to confirm the incident. Determining the cause of the incident is a step that should be done after investigating all symptoms to confirm the incident, because it can ensure that the root cause and source of the incident are identified and analyzed, and that the incident response is directed and focused. Determining the cause of the incident involves examining and testing the affected IT systems and data, and tracing and tracking the origin and path of the incident, using various techniques and tools, such as forensics, malware analysis, or reverse engineering. Determining the cause of the incident can also help to:

Disconnecting the system involved from the network is a step that should be done along with investigating all symptoms to confirm the incident, because it can ensure that the system is isolated and protected from any external or internal influences or interferences, and that the incident response is conducted in a safe and controlled environment. Disconnecting the system involved from the network can also help to:

Isolating and containing the system involved is a step that should be done after investigating all symptoms to confirm the incident, because it can ensure that the incident is confined and restricted, and that the incident response is continued and maintained. Isolating and containing the system involved involves applying and enforcing the appropriate security measures and controls to limit or stop the activity and impact of the incident on the IT systems and data, such as firewall rules, access policies, or encryption keys. Isolating and containing the system involved can also help to:

Intellectual property rights are PRIMARILY concerned with which of the following?

Owner’s ability to realize financial gain

Owner’s ability to maintain copyright

Right of the owner to enjoy their creation

Right of the owner to control delivery method

Intellectual property rights are primarily concerned with the owner’s ability to realize financial gain from their creation. Intellectual property is a category of intangible assets that result from human creativity and innovation, such as inventions, designs, artworks, literature, music, and software. Intellectual property rights are the legal rights that grant the owner exclusive control over the use, reproduction, distribution, and modification of their intellectual property. Intellectual property rights aim to protect the owner’s interests and incentives, and to reward them for their contribution to society and the economy.