Which of the following MLflow Model Registry use cases requires the use of an HTTP Webhook?

Which of the following is a simple statistic to monitor for categorical feature drift?
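As a rough illustration of what simple summary statistics for a categorical feature can look like, the sketch below computes the mode, the number of unique values, and the percentage of missing values for a baseline sample and a current sample. The function name `categorical_summary` and the sample data are hypothetical and are not taken from the question.

```python
from collections import Counter

def categorical_summary(values):
    """Return simple summary statistics for one categorical column.

    Missing values are assumed to be represented as None.
    """
    present = [v for v in values if v is not None]
    counts = Counter(present)
    mode = counts.most_common(1)[0][0] if counts else None
    pct_missing = 100.0 * (len(values) - len(present)) / len(values) if values else 0.0
    return {"mode": mode, "n_unique": len(counts), "pct_missing": pct_missing}

# Hypothetical baseline and current samples of the same feature.
baseline = ["red", "blue", "red", "green", "red", None]
current = ["blue", "blue", "red", None, None, "blue"]

baseline_stats = categorical_summary(baseline)
current_stats = categorical_summary(current)

# A change in any of these values between the two windows is a hint
# that the feature's distribution may have shifted.
print(baseline_stats)
print(current_stats)
```

Comparing the two dictionaries side by side is the simplest form of monitoring; a production setup would likely add thresholds or a statistical test on top.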

Which of the following operations in Feature Store Client `fs` can be used to return a Spark DataFrame of a data set associated with a Feature Store table?

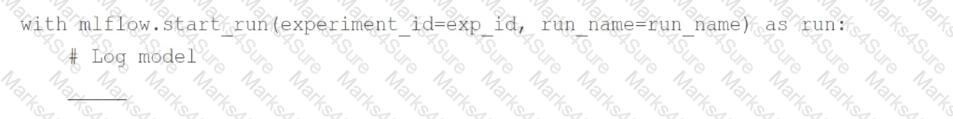

A data scientist has developed a scikit-learn model `sklearn_model` and they want to log the model using MLflow.

They write the following incomplete code block:

Which of the following lines of code can be used to fill in the blank so the code block can successfully complete the task?

A machine learning engineer wants to programmatically create a new Databricks Job whose schedule depends on the result of some automated tests in a machine learning pipeline.

Which of the following Databricks tools can be used to programmatically create the Job?

A data scientist would like to enable MLflow Autologging for all machine learning libraries used in a notebook. They want to ensure that MLflow Autologging is used no matter what version of the Databricks Runtime for Machine Learning is used to run the notebook and no matter what workspace-wide configurations are selected in the Admin Console.

Which of the following lines of code can they use to accomplish this task?

Which of the following Databricks-managed MLflow capabilities is a centralized model store?

A data scientist has developed a scikit-learn random forest model `model`, but they have not yet logged `model` with MLflow. They want to obtain the input schema and the output schema of the model so they can document what type of data is expected as input.

Which of the following MLflow operations can be used to perform this task?

A data scientist has computed updated feature values for all primary key values stored in the Feature Store table `features`. In addition, feature values for some new primary key values have also been computed. The updated feature values are stored in the DataFrame `features_df`. They want to replace all data in `features` with the newly computed data.

Which of the following code blocks can they use to perform this task using the Feature Store Client `fs`?

A)

B)

C)

D)

E)

A machine learning engineering team wants to build a continuous pipeline for data preparation of a machine learning application. The team would like the data to be fully processed and made ready for inference in a series of equal-sized batches.

Which of the following tools can be used to provide this type of continuous processing?

A machine learning engineering team has written predictions computed in a batch job to a Delta table for querying. However, the team has noticed that the querying is running slowly. The team has already tuned the size of the data files. Upon investigating, the team has concluded that the rows meeting the query condition are sparsely located throughout each of the data files.

Based on the scenario, which of the following optimization techniques could speed up the query by colocating similar records while considering values in multiple columns?
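One technique that matches this description is Z-ordering, which Delta Lake exposes through `OPTIMIZE ... ZORDER BY`. The sketch below only illustrates the underlying idea, a Z-order (Morton) key that interleaves the bits of two column values so that rows close in both columns sort near each other. It is not Delta Lake's implementation, and the helper name `interleave_bits` and the toy data are assumptions made for illustration.

```python
def interleave_bits(x, y, bits=8):
    """Interleave the low `bits` bits of x and y into one Z-order (Morton) key."""
    key = 0
    for i in range(bits):
        key |= ((x >> i) & 1) << (2 * i)      # bits of x go to even positions
        key |= ((y >> i) & 1) << (2 * i + 1)  # bits of y go to odd positions
    return key

# Toy table with two integer columns, each taking values 0..3.
rows = [(x, y) for x in range(4) for y in range(4)]

# Sorting by the interleaved key groups rows that are close in BOTH columns,
# so a filter on either column touches fewer contiguous chunks.
rows_sorted = sorted(rows, key=lambda r: interleave_bits(r[0], r[1]))

for row in rows_sorted:
    print(row, interleave_bits(*row))
```

Sorting on a single column would cluster only that column; the interleaved key trades some locality in each column for locality across both.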

A data scientist is using MLflow to track their machine learning experiment. As a part of each MLflow run, they are performing hyperparameter tuning. The data scientist would like to have one parent run for the tuning process with a child run for each unique combination of hyperparameter values.

They are using the following code block:

The code block is not nesting the runs in MLflow as they expected.

Which of the following changes does the data scientist need to make to the above code block so that it successfully nests the child runs under the parent run in MLflow?

A machine learning engineer wants to move their model version `model_version` for the MLflow Model Registry model `model` from the Staging stage to the Production stage using MLflow Client `client`.

Which of the following code blocks can they use to accomplish the task?

A)

B)

C)

D)

E)

A data scientist set up a machine learning pipeline to automatically log a data visualization with each run. They now want to view the visualizations in Databricks.

Which of the following locations in Databricks will show these data visualizations?